Beyond Naked editor Lisa Cooper burning midnight oil with FCPX.


OK, so you’ve got your Events all nicely structured after reading Part I, Part II and Part III of this post. And now you’re wondering: what’s the right way to assemble a feature-length documentary film in Final Cut Pro X? That’s what Shane Tilston recently asked me in this question:

Did you work with just one project file for the whole film? If so, when you disabled certain events, did you just live with the “media missing” red notation? Or – did you use multiple projects for different scenes, and then combine them? If you did that, how did you combine them? I haven’t played around with that yet, but I’ve heard that’s tricky.

It is tricky! But the idea is simple: cutting a feature-length film is like loading a train. Have you ever watched a train being loaded on a track? First the engine connects to one car (the first sequence), then a second car is hooked up, and then the engine pushes the whole thing into a third car, connecting it. At some point the engine may pull the entire assembly forward until it passes a switch in the track, which is thrown so the cars can be pushed down a different track to connect with other cars. It’s a tedious process, but eventually all the cars are connected to the train, which jubilantly toots its horn and chugs away.

In Final Cut Pro X terms, each car is a Project (also called a sequence) in your film. Each sequence is composed of scenes, and the scenes are composed of shots. (Sometimes a single long scene is a sequence all to itself, but usually a sequence contains more than one.) When I started out, this was a little mysterious to me. How do I know what to put into a sequence? How do I know where one sequence ends and another begins?

This is why developing a preliminary structure for your film is so helpful at the beginning. We used sticky notes to write down key points and story elements that we knew had to be in there somewhere, and stuck them to a big piece of white foam board. Then we started to see things that belonged together, so we moved them next to each other. Eventually the stickies covered many boards. As the structure came together, we used color-coded stickies to represent items that would go into the same sequence.

Then you adjust this board as you progress. Here’s an example. In the shot below, the board along the wall is dedicated to figuring out how the sequences should fit together (the order of the cars in the train). Beneath that, you can see that each sequence has its own red note, with yellow notes below it indicating which scenes are included and in what order.

The most important thing about this board is that it is going to change constantly, especially in the beginning. All through the process, right up until “picture lock,” you can and will be moving things around to make them fit better. This was an exceedingly difficult process for me. Lisa, my creative partner, is much more organized than I am, so I was able to depend on her for much of it, and that was indispensable. So if this stuff doesn’t come easily to you, find someone to collaborate with who can manage it. It’s essential.

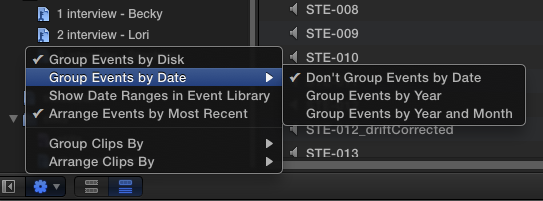

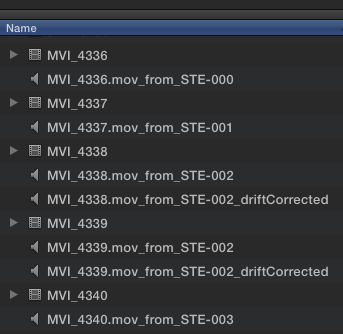

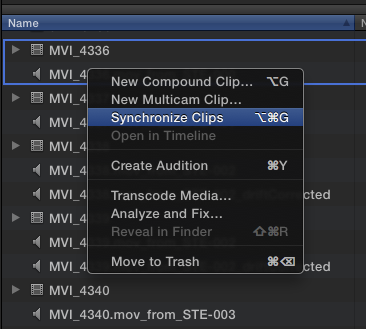

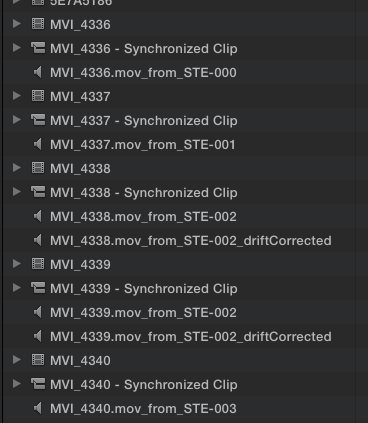

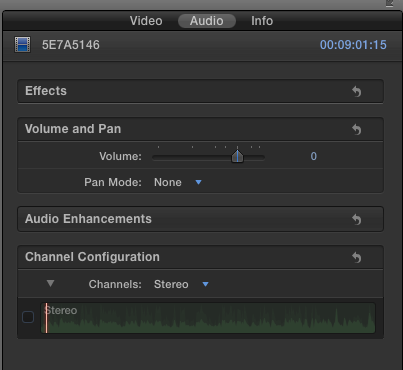

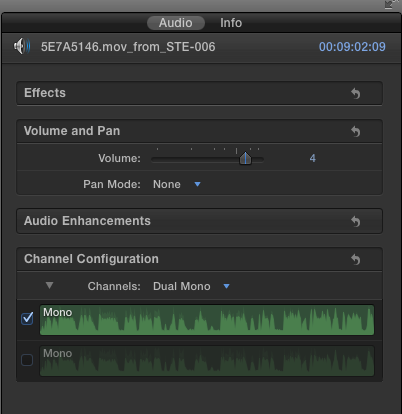

So to sum up: we created a separate project file for each sequence in the film. From a technical standpoint, the main reason for doing this is that it allows you to load only the Events you need for a given sequence, instead of having to load all the Events in the film. In our case that was 2.5 terabytes of footage, which brought our 2011 iMac to its knees when we tried to open all the Events simultaneously. Even with a super fast computer, FCPX will, at best, behave erratically when you load too many Events. The secondary reason is that it simply lets you work faster: you can put your finger on the footage you’re looking for much more quickly with three Events open than with 30.

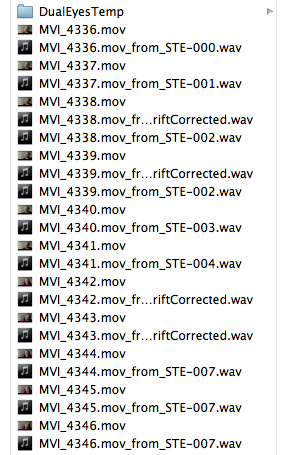

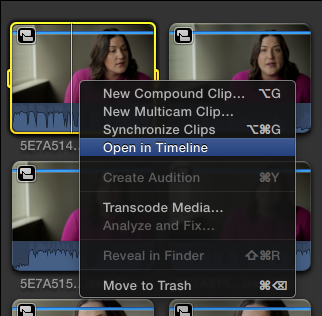

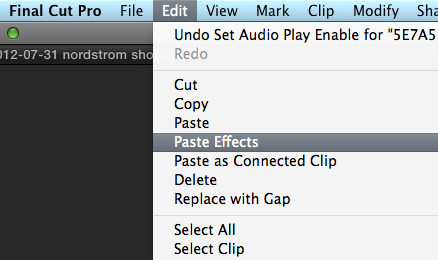

As you progress further into the edit, you will have more and more sequences finished. Our film had 34 sequences in the end (the credits being the 34th). When we were ready to put them all together into the “first assembly,” we shared each sequence as a master file, created a new Event called “Screener,” and imported each file into that Event.

Then we created a new project called “Assembly 1” and dropped all the screener files into it, in order. This is quicker if you title each exported sequence with its number first, i.e., “01 opening sequence,” “02 Fremont Dawn,” etc. Then we exported it, and voilà, we had our first assembly. Grab a big notebook and watch the first assembly, taking notes at the places you think aren’t working. Then reopen those individual sequences and keep working. When you’re feeling good, make another assembly. Repeat. Again. And again. We repeated this process a dozen times or so before we got to locked picture, where you get to write a sticky that looks like this:
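Why put the number first, and why “01” rather than “1”? A list sorted by plain text puts “10” before “2,” so unpadded numbers scramble the order once you pass nine sequences. Some apps sort numbers “naturally,” but zero-padding keeps the order right either way. Here is a minimal Python sketch of the naming idea; the `numbered_titles` helper and the placeholder titles after “Fremont Dawn” are hypothetical, and the two-digit width is an assumption that happens to fit our 34 sequences:

```python
def numbered_titles(titles, width=2):
    """Prefix each title with its 1-based position, zero-padded to `width` digits."""
    return [f"{i:0{width}d} {t}" for i, t in enumerate(titles, start=1)]


# Hypothetical list of 34 sequence titles; only the first two come from our film.
sequences = ["opening sequence", "Fremont Dawn"] + [f"sequence {n}" for n in range(3, 35)]

padded = numbered_titles(sequences)
unpadded = [f"{i} {t}" for i, t in enumerate(sequences, start=1)]

# A plain text sort keeps the padded names in story order...
print(sorted(padded)[:3])    # ['01 opening sequence', '02 Fremont Dawn', '03 sequence 3']
# ...but jumps from sequence 1 straight to sequence 10 without padding.
print(sorted(unpadded)[:3])  # ['1 opening sequence', '10 sequence 10', '11 sequence 11']
```

If a film ever runs past 99 sequences, bump `width` to 3.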

One thing that’s a little awkward about this process is that some sequences will have music playing between them, or other transitional sound or picture elements. In that case, it may make sense to combine two sequences into one. In our film, there were two sequences where combining them would have left us with too many Events open, so we waited until the final assembly to lay in the connecting elements between them.

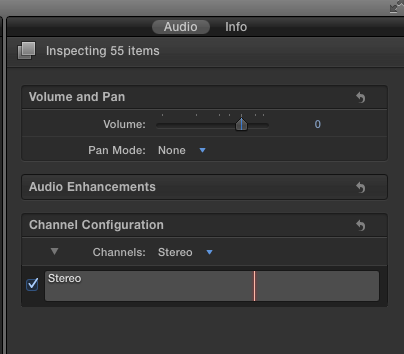

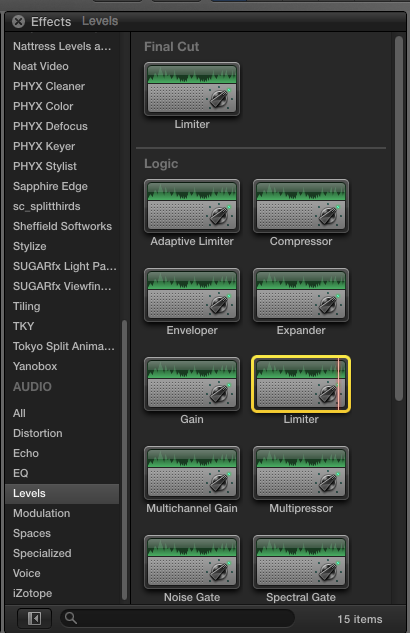

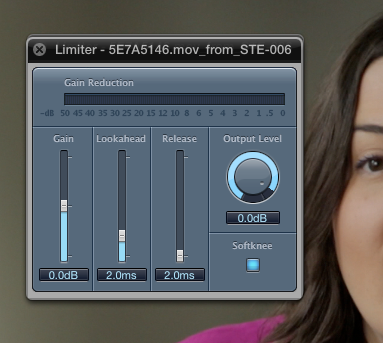

We did all of the color correction and first-pass audio mixing inside each of the sequences, not in the assemblies. That way, in the end, the assemblies contained only the master files and, in the case of the final assembly, two tracks of music that were used to bridge the two sequences mentioned above:

In the screen capture above, note the orange chapter markers. You can see where I’ve combined several sequences into one for ease of editing as the process went on; each orange chapter marker shows what was an individual sequence earlier in the project. The markers are there for easy navigation on the final DVD, as well as for your own navigation: you will need to replace individual sequences as you make final color corrections, audio edits, or editorial changes, and the markers make each one a snap to locate.

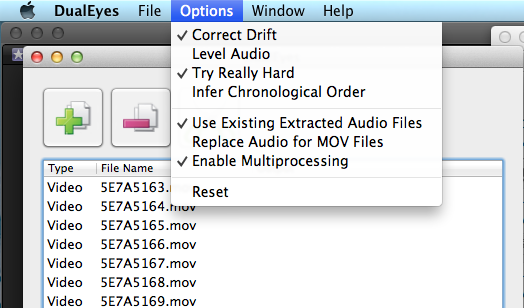

OK, so let’s go back to where things get tricky. In FCPX, you can’t have an entire feature-length documentary’s worth of Events open at once. We couldn’t, at least. So you have to selectively move Events and projects in and out of the places where FCPX can see them, and restart to load only the stuff you need and ignore what you don’t. This is a pain in the ass. It’s the worst thing about FCPX. But it’s the price we pay for the wings it gives us to fly over everything else. Lisa and I spent an insane amount of time moving folders around. We had dreams at night about moving folders into the wrong place and not being able to find them later. Until we discovered Event Manager X. I won’t say anything else about it here, since I’ve already raved about it.
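For the curious, the folder shuffling itself boils down to something like the Python sketch below. This is not how Event Manager X works internally; it is a hypothetical helper, `park_events`, that assumes an FCPX setup where Events are picked up from one “active” folder (on our FCPX 10.0-era setup, a Final Cut Events folder) and that anything moved into a separate “parked” folder is ignored after a restart. Both paths are parameters you supply, and you would quit FCPX before running it.

```python
import shutil
import tempfile
from pathlib import Path


def park_events(active_dir: Path, parked_dir: Path, keep: set[str]) -> None:
    """Leave only the Event folders named in `keep` inside active_dir.

    Folders not in `keep` are moved to parked_dir; folders in `keep` that were
    parked earlier are moved back. Hypothetical helper: quit FCPX first.
    """
    parked_dir.mkdir(parents=True, exist_ok=True)

    def move(src: Path, dest_dir: Path) -> None:
        dest = dest_dir / src.name
        # shutil.move would nest src inside an existing folder, so refuse instead.
        if dest.exists():
            raise FileExistsError(f"{dest} already exists")
        shutil.move(str(src), str(dest))

    # Park everything this sequence doesn't need.
    for folder in list(active_dir.iterdir()):
        if folder.is_dir() and folder.name not in keep:
            move(folder, parked_dir)
    # Bring back anything this sequence needs that was parked earlier.
    for folder in list(parked_dir.iterdir()):
        if folder.is_dir() and folder.name in keep:
            move(folder, active_dir)


if __name__ == "__main__":
    # Demo in a throwaway directory with hypothetical Event names.
    with tempfile.TemporaryDirectory() as tmp:
        active, parked = Path(tmp) / "active", Path(tmp) / "parked"
        for name in ["Interviews", "Fremont B-roll", "Archival"]:
            (active / name).mkdir(parents=True)
        park_events(active, parked, keep={"Fremont B-roll"})
        print(sorted(p.name for p in active.iterdir()))  # ['Fremont B-roll']
        print(sorted(p.name for p in parked.iterdir()))  # ['Archival', 'Interviews']
```

Moving folders on the same drive is just a rename, so this is fast even with terabytes of media. The guard against existing destinations exists precisely to avoid the “wrong place” nightmare described above.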

That’s pretty much it. I’m sure there are some things I’ve missed, so please ask questions and I’ll continue to flesh out this post in response.